How I leveraged a scientific paper on Multifractal Analysis to capture all of Dollar General’s US stores

Background

Before I dive into the spatial stuff, here’s some background on why I sought out to create an optimized spatial search.

I like to contribute to a neat Open Source Project called alltheplaces (more on this project in future posts). The goal of the project is pretty simple - collect all store locations into a single geospatial dataset anyone can use.

The DG Problem

Not every company is great about listing their store locations. Sometimes companies have a list of stores that’s easy to parse with a spider, other times they just provide a store lookup that takes a postal code and spits out the nearest stores.

Enter Dollar General (DG).

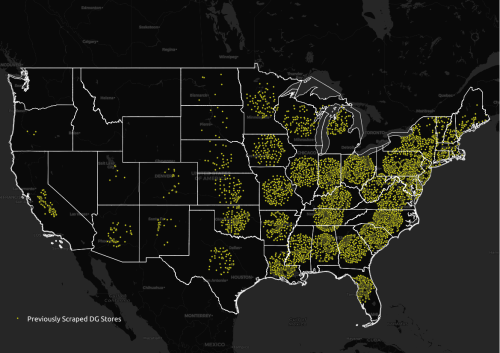

A scraper for DG already existed, but I noticed after mapping the data only the stores within a 100-mile radius of the center of each state were actually collected.

Weird, right? So what was going on?

The scraper was asking for stores in each state, but only using the coordinates of the center of each state.

Potential Fixes

Okay, so we need a solution that gives us national coverage for all stores.

Option 1: Use Zipcodes

Sounds like a good approach at first but… the downside to this idea is a volume problem. There are ~ 42,000 zip codes in the US. That’s nearly 3x the number of Dollar General stores!

With a zip-code based search, we would be sending way too many requests to their website every second, dramatically slowing down the site.

Duplicates also become a major problem. With zipcodes we would be collecting every store within 100 miles of each zipcode. There would be lots of overlap between nearby postal codes.

Option 2: Create our own search grid

I couldn’t be the first person to encounter this problem right?

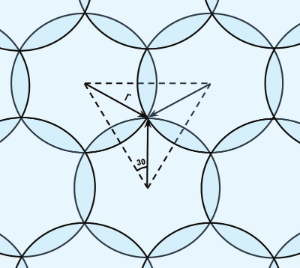

So I looked for scientific research papers related to optimizing search grids and found this awesome paper - Barycentric fixed-mass method for multifractal analysis by Kamer et al.

Using the methods contained in the paper above, I developed a Python script to generate a grid of minimally overlapping circles - each representing a 100mi search radius.

(Source: Kramer et al)

(Source: Kramer et al)

The centroid coordinates of each search radius are used in place of zipcodes for store locators that allow searches by coordinates.

This drastically reduces the number of requests that are sent to the website from 42,000 to 773.

That’s a 98% decrease!!!

Because each search radius is minimally overlapping (~13%), the number of duplicates returned in each request is also greatly reduced.

Win win.

Results

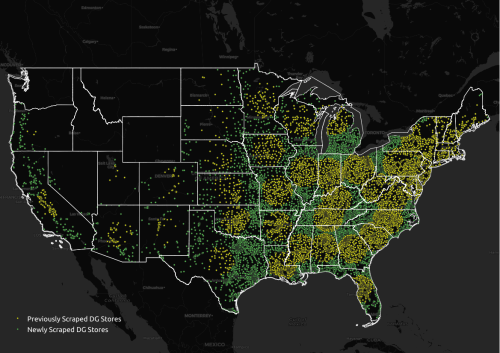

The locations represented in green were all the new locations we were able to capture thanks to the optimized search grid. We were missing so much data originally! Our California data in particular is in much better shape now.

Newly Captured DG Stores